Chapter 7. Managing Storage

When managing a VM Guest on the VM Host Server itself, you can access the complete file system of the VM Host Server to create virtual hard disks or to attach existing images to the VM Guest. This is not possible when managing VM Guests from a remote host. For this reason, libvirt supports so-called "storage pools", which can be accessed from remote machines.

![[Tip]](static/images/tip.png) | CD/DVD ISO images |

|---|---|

To access CD/DVD ISO images on the VM Host Server from a remote host, they also need to be placed in a storage pool. | |

libvirt knows two different types of storage: volumes and pools.

- Storage Volume

A storage volume is a storage device that can be assigned to a guest—a virtual disk or a CD/DVD/floppy image. Physically (on the VM Host Server) it can be a block device (a partition, a logical volume, etc.) or a file.

- Storage Pool

A storage pool basically is a storage resource on the VM Host Server that can be used for storing volumes, similar to network storage for a desktop machine. Physically it can be one of the following types:

- File System Directory

A directory for hosting image files. The files can be either one of the supported disk formats (raw, qcow2, or qed), or ISO images.

- Physical Disk Device

Use a complete physical disk as storage. A partition is created for each volume that is added to the pool.

- Pre-Formatted Block Device

Specify a partition to be used in the same way as a file system directory pool (a directory for hosting image files). The only difference to using a file system directory is that libvirt takes care of mounting the device.

- iSCSI Target (iscsi)

Set up a pool on an iSCSI target. You need to have logged in to the volume once before you can use it with libvirt. Volume creation on iSCSI pools is not supported; instead, each existing Logical Unit Number (LUN) represents a volume. Each volume/LUN also needs a valid (empty) partition table or disk label before you can use it. If it is missing, use fdisk to add it:

    ~ # fdisk -cu /dev/disk/by-path/ip-192.168.2.100:3260-iscsi-iqn.2010-10.com.example:[...]-lun-2
    Device contains neither a valid DOS partition table, nor Sun, SGI
    or OSF disklabel
    Building a new DOS disklabel with disk identifier 0xc15cdc4e.
    Changes will remain in memory only, until you decide to write them.
    After that, of course, the previous content won't be recoverable.

    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

    Command (m for help): w
    The partition table has been altered!

    Calling ioctl() to re-read partition table.
    Syncing disks.

- LVM Volume Group (logical)

Use an LVM volume group as a pool. You may either use a predefined volume group, or create a group by specifying the devices to use. Storage volumes are created as logical volumes in the volume group.

![[Warning]](static/images/warning.png) | Deleting the LVM Based Pool |

|---|---|

When the LVM-based pool is deleted in the Storage Manager, the volume group is deleted as well. This results in a non-recoverable loss of all data stored on the pool! | |

- Multipath Devices

At the moment, multipathing support is limited to assigning existing devices to the guests. Volume creation or configuring multipathing from within libvirt is not supported.

- Network Exported Directory

Specify a network directory to be used in the same way as a file system directory pool (a directory for hosting image files). The only difference to using a file system directory is that libvirt takes care of mounting the directory. Supported protocols are NFS and glusterfs.

- SCSI Host Adapter

Use a SCSI host adapter in almost the same way as an iSCSI target. It is recommended to use a device name from /dev/disk/by-* rather than the simple /dev/sdX, since the latter may change (for example, when adding or removing hard disks). As with iSCSI pools, volume creation is not supported; instead, each existing LUN (Logical Unit Number) represents a volume.

![[Warning]](static/images/warning.png) | Security Considerations |

|---|---|

To avoid data loss or data corruption, do not use resources such as LVM volume groups or iSCSI targets that back storage pools directly on the VM Host Server as well. There is no need to connect to these resources from the VM Host Server or to mount them on the VM Host Server. Do not mount partitions on the VM Host Server by label. Under certain circumstances, a partition may be labeled from within a VM Guest with a name that already exists on the VM Host Server. | |

7.1. Managing Storage with Virtual Machine Manager

The Virtual Machine Manager provides a graphical interface, the Storage Manager, to manage storage volumes and pools. To access it, open the details of a connection, either from the connection's right-click menu or from the menu bar, and select the storage tab.

7.1.1. Adding a Storage Pool

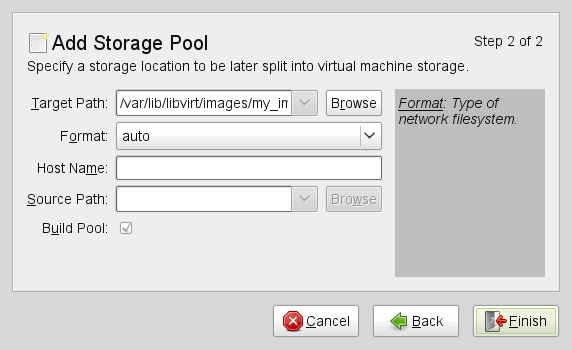

To add a storage pool, proceed as follows:

Click the plus symbol in the bottom left corner to open the dialog for adding a storage pool.

Provide a name for the pool (consisting of alphanumeric characters plus _-.) and select a type. Proceed to the next step.

Specify the needed details in the following window. The data that needs to be entered depends on the type of pool you are creating.

- Type

Specify an existing directory.

- Type

The directory which hosts the devices. The default value /dev should fit in most cases.

Format of the device's partition table. If in doubt, get the needed format by running the command parted -l on the VM Host Server.

Path to the device. It is recommended to use a device name from /dev/disk/by-* rather than the simple /dev/sdX, since the latter may change (for example, when adding or removing hard disks). You need to specify the path that represents the whole disk, not a partition on the disk (if existing).

Activating this option formats the device. Use with care: all data on the device will be lost!

- Type

Mount point on the VM Host Server file system.

File system format of the device. The default value auto should work.

Path to the device file. It is recommended to use a device name from /dev/disk/by-* rather than the simple /dev/sdX, since the latter may change (for example, when adding or removing hard disks).

- Type

Get the necessary data by running the following command on the VM Host Server:

iscsiadm --mode node

It returns a list of iSCSI volumes in the following format. The elements needed are IP_ADDRESS and TARGET_NAME_(IQN):

IP_ADDRESS:PORT,TPGT TARGET_NAME_(IQN)

The directory containing the device file. Use /dev/disk/by-path (default) or /dev/disk/by-id.

Host name or IP address of the iSCSI server.

The iSCSI target name (IQN).

- Type

If you use an existing volume group, specify the existing device path. If you build a new LVM volume group, specify a device name in the /dev directory that does not already exist.

Leave empty when using an existing volume group. When creating a new one, specify its devices here.

Only activate when creating a new volume group.

- Type

Support of multipathing is currently limited to making all multipath devices available. You may therefore enter an arbitrary string here. A value is needed because the XML parser fails otherwise, but it is ignored.

- Type

Mount point on the VM Host Server file system.

Network file system protocol

IP address or hostname of the server exporting the network file system.

Directory on the server that is being exported.

- Type

The directory containing the device file. Use /dev/disk/by-path (default) or /dev/disk/by-id.

Name of the SCSI adapter.

![[Note]](static/images/note.png) | File Browsing |

|---|---|

Using the file browser is not possible when operating from a remote host. | |

Complete the dialog to add the storage pool.

7.1.2. Managing Storage Pools

Virtual Machine Manager's Storage Manager lets you create or delete volumes in a pool. You may also temporarily deactivate or permanently delete existing storage pools. Changing the basic configuration of a pool is currently not supported by SUSE.

7.1.2.1. Starting, Stopping and Deleting Pools

The purpose of storage pools is to provide block devices located on the VM Host Server that can be added to a VM Guest when managing it from a remote host. To make a pool temporarily inaccessible from remote hosts, stop it by clicking the stop symbol in the bottom left corner of the Storage Manager. Stopped pools are grayed out in the list pane. By default, a newly created pool is started automatically when the VM Host Server boots.

To start an inactive pool and make it available to remote hosts again, click the play symbol in the bottom left corner of the Storage Manager.

![[Note]](static/images/note.png) | A Pool's State Does not Affect Attached Volumes |

|---|---|

Volumes from a pool attached to VM Guests are always available, regardless of the pool's state (stopped or started). The state of the pool solely affects the ability to attach volumes to a VM Guest via remote management. | |

To make a pool permanently inaccessible, delete it by clicking the shredder symbol in the bottom left corner of the Storage Manager. You may only delete inactive pools. Deleting a pool does not physically erase its contents on the VM Host Server; it only deletes the pool configuration. However, be extra careful when deleting pools, especially LVM volume group-based pools:

![[Warning]](static/images/warning.png) | Deleting Storage Pools |

|---|---|

Deleting storage pools based on local file system directories, local partitions, or disks has no effect on the availability of volumes from these pools that are currently attached to VM Guests. Volumes located in pools of type iSCSI, SCSI, LVM group, or Network Exported Directory become inaccessible from the VM Guest if the pool is deleted. Although the volumes themselves are not deleted, the VM Host Server no longer has access to the resources. Volumes on iSCSI/SCSI targets or in a Network Exported Directory become accessible again when you create an adequate new pool or mount/access these resources directly from the host system. When deleting an LVM group-based storage pool, the LVM group definition is erased and the LVM group no longer exists on the host system. The configuration is not recoverable and all volumes from this pool are lost. | |

7.1.2.2. Adding Volumes to a Storage Pool

Virtual Machine Manager lets you create volumes in all storage pools, except in pools of types Multipath, iSCSI, or SCSI. A volume in these pools is equivalent to a LUN and cannot be changed from within libvirt.

A new volume can be created either using the Storage Manager or while adding a new storage device to a VM Guest. In both cases, select a storage pool and then start creating a new volume.

Specify a name for the image and choose an image format (note that SUSE currently only supports raw, qcow2, or qed images). The latter option is not available on LVM group-based pools.

Specify the maximum size of the volume and the amount of space that should initially be allocated. If both values differ, a sparse image file, growing on demand, will be created.

Confirm the dialog to start the volume creation.

7.1.2.3. Deleting Volumes From a Storage Pool

Deleting a volume can only be done from the Storage Manager: select the volume, choose to delete it, and confirm the deletion. Use this function with extreme care!

![[Warning]](static/images/warning.png) | No Checks Upon Volume Deletion |

|---|---|

A volume will be deleted in any case, regardless of whether it is currently used in an active or inactive VM Guest. There is no way to recover a deleted volume. Whether a volume is used by a VM Guest is indicated in a column of the volume list in the Storage Manager. | |

7.2. Managing Storage with virsh

Managing storage from the command line is also possible by using virsh. However, creating storage pools is currently not supported by SUSE. Therefore, this section is restricted to documenting functions such as starting, stopping, and deleting pools, and volume management.

A list of all virsh subcommands for managing pools and volumes is available by running virsh help pool and virsh help volume, respectively.

7.2.1. Listing Pools and Volumes

List all currently active pools by executing the following command. To also list inactive pools, add the option --all:

virsh pool-list --details

Details about a specific pool can be obtained with the pool-info subcommand:

virsh pool-info POOL

Volumes can only be listed per pool by default. To list all volumes from a pool, enter the following command:

virsh vol-list --details POOL

At the moment virsh offers no tools to show whether a volume is used by a guest or not. The following procedure describes a way to list volumes from all pools that are currently used by a VM Guest.

Procedure 7.1. Listing all Storage Volumes Currently Used on a VM Host Server

Create an XSLT style sheet by saving the following content to a file, for example, ~/libvirt/guest_storage_list.xsl:

    <?xml version="1.0" encoding="UTF-8"?>
    <xsl:stylesheet version="1.0"
      xmlns:xsl="http://www.w3.org/1999/XSL/Transform">
      <xsl:output method="text"/>
      <xsl:template match="text()"/>
      <xsl:strip-space elements="*"/>
      <xsl:template match="disk">
        <xsl:text>  </xsl:text>
        <xsl:value-of select="(source/@file|source/@dev|source/@dir)[1]"/>
        <!-- newline after each disk path -->
        <xsl:text>&#10;</xsl:text>
      </xsl:template>
    </xsl:stylesheet>

Run the following commands in a shell. It is assumed that the guests' XML definitions are all stored in the default location (/etc/libvirt/qemu). xsltproc is provided by the package libxslt.

    SSHEET="$HOME/libvirt/guest_storage_list.xsl"
    cd /etc/libvirt/qemu
    for FILE in *.xml; do
      # print the guest name, then the disks it uses
      basename "$FILE" .xml
      xsltproc "$SSHEET" "$FILE"
    done
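Because volumes are listed per pool, showing every volume on the VM Host Server means looping over the pools. The following is a minimal sketch of such a loop, not a supported tool. It assumes that virsh pool-list prints two header lines followed by one row per pool with the pool name in the first column; the function names pool_names and list_all_volumes are made up for this example.

```shell
#!/bin/sh
# pool_names: read `virsh pool-list` output on stdin and print one pool
# name per line. Assumption: two header lines, pool name in column 1.
pool_names() {
    awk 'NR > 2 && NF > 0 { print $1 }'
}

# list_all_volumes: run vol-list for every active pool.
# Inactive pools are skipped because pool-list is called without --all.
list_all_volumes() {
    virsh pool-list | pool_names | while read -r pool; do
        echo "== $pool =="
        virsh vol-list --details "$pool"
    done
}

# Usage on the VM Host Server:
#   list_all_volumes
```

The parsing is kept separate from the virsh calls so that it can be checked against sample output without a running libvirtd.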

7.2.2. Starting, Stopping and Deleting Pools

Use the virsh pool subcommands to start, stop or delete a pool. Replace POOL with the pool's name or its UUID in the following examples:

- Stopping a Pool

virsh pool-destroy POOL

![[Note]](static/images/note.png) | A Pool's State Does not Affect Attached Volumes |

|---|---|

Volumes from a pool attached to VM Guests are always available, regardless of the pool's state (stopped or started). The state of the pool solely affects the ability to attach volumes to a VM Guest via remote management. | |

- Deleting a Pool

virsh pool-delete POOL

![[Warning]](static/images/warning.png) | Deleting Storage Pools |

|---|---|

Deleting storage pools based on local file system directories, local partitions, or disks has no effect on the availability of volumes from these pools that are currently attached to VM Guests. Volumes located in pools of type iSCSI, SCSI, LVM group, or Network Exported Directory become inaccessible from the VM Guest if the pool is deleted. Although the volumes themselves are not deleted, the VM Host Server no longer has access to the resources. Volumes on iSCSI/SCSI targets or in a Network Exported Directory become accessible again when you create an adequate new pool or mount/access these resources directly from the host system. When deleting an LVM group-based storage pool, the LVM group definition is erased and the LVM group no longer exists on the host system. The configuration is not recoverable and all volumes from this pool are lost. | |

- Starting a Pool

virsh pool-start POOL

- Enable Autostarting a Pool

virsh pool-autostart POOL

Only pools that are marked to autostart will automatically be started when the VM Host Server reboots.

- Disable Autostarting a Pool

virsh pool-autostart POOL --disable
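When scripting these subcommands, it can help to check a pool's current state first, since starting a pool that is already running (or stopping one that is already stopped) returns an error. The sketch below reads the state from virsh pool-info. It assumes that the output contains a line of the form "State: running" for a started pool; the function names pool_state and toggle_pool are hypothetical.

```shell
#!/bin/sh
# pool_state: read `virsh pool-info POOL` output on stdin and print the
# value of its "State:" line. Assumption: started pools report "running".
pool_state() {
    awk -F': *' '$1 == "State" { sub(/ +$/, "", $2); print $2 }'
}

# toggle_pool: stop a running pool, start a stopped one.
# $1 is the pool's name or UUID.
toggle_pool() {
    pool=$1
    state=$(virsh pool-info "$pool" | pool_state)
    if [ "$state" = "running" ]; then
        # pool-destroy stops the pool; it does not remove its definition
        virsh pool-destroy "$pool"
    else
        virsh pool-start "$pool"
    fi
}

# Usage on the VM Host Server (hypothetical pool name):
#   toggle_pool mypool
```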

7.2.3. Adding Volumes to a Storage Pool

virsh offers two ways to create storage volumes: either from an XML definition with vol-create and vol-create-from, or via command line arguments with vol-create-as. The first two methods are currently not supported by SUSE, therefore this section focuses on the subcommand vol-create-as.

To add a volume to an existing pool, enter the following command:

virsh vol-create-as POOL NAME 12G --format raw|qcow2|qed --allocation 4G

- POOL

Name of the pool to which the volume should be added.

- NAME

Name of the volume.

- 12G

Size of the image, in this example 12 gigabytes. Use the suffixes k, M, G, T for kilobyte, megabyte, gigabyte, and terabyte, respectively.

- --format raw|qcow2|qed

Format of the volume. SUSE currently supports raw, qcow2, and qed.

- --allocation 4G

Optional parameter. By default virsh creates a sparse image file that grows on demand. Specify the amount of space that should be allocated with this parameter (4 gigabytes in this example). Use the suffixes k, M, G, T for kilobyte, megabyte, gigabyte, and terabyte, respectively.

When not specifying this parameter, a sparse image file with no allocation will be generated. If you want to create a non-sparse volume, specify the whole image size with this parameter (12G in this example).
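To make the difference between a sparse and a non-sparse volume concrete, the following sketch assembles the two command lines from the parameters described above. It only prints the commands rather than running them; the helper vol_create_cmd, the pool name default, and the volume names are assumptions made for this example.

```shell
#!/bin/sh
# vol_create_cmd: print the virsh command line for a new volume.
# Arguments: POOL NAME SIZE FORMAT [ALLOCATION]
# Without ALLOCATION, the parameter is left out (sparse image, as
# described above). Passing the full SIZE requests a non-sparse volume.
vol_create_cmd() {
    cmd="virsh vol-create-as $1 $2 $3 --format $4"
    if [ -n "${5:-}" ]; then
        cmd="$cmd --allocation $5"
    fi
    echo "$cmd"
}

# Hypothetical pool and volume names:
vol_create_cmd default sparse.qcow2 12G qcow2   # sparse, grows on demand
vol_create_cmd default full.raw 12G raw 12G     # fully allocated
```

After reviewing the printed lines, they can be run as they are on the VM Host Server.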

7.2.3.1. Cloning Existing Volumes

Another way to add volumes to a pool is to clone an existing volume. The new instance is always created in the same pool as the original.

virsh vol-clone NAME_EXISTING_VOLUME NAME_NEW_VOLUME --pool POOL

7.2.4. Deleting Volumes from a Storage Pool

To permanently delete a volume from a pool, use the subcommand vol-delete:

virsh vol-delete NAME --pool POOL

--pool is optional. libvirt tries to locate the volume automatically. If that fails, specify this parameter.

![[Warning]](static/images/warning.png) | No Checks Upon Volume Deletion |

|---|---|

A volume will be deleted in any case, regardless of whether it is currently used in an active or inactive VM Guest. There is no way to recover a deleted volume. Whether a volume is used by a VM Guest can only be detected by using the method described in Procedure 7.1, "Listing all Storage Volumes Currently Used on a VM Host Server". | |
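Since virsh performs no such check itself, a script can search the guest definitions for the volume's path before deleting it. The following is a rough sketch under the same assumption as Procedure 7.1, namely that all guest XML definitions are stored in /etc/libvirt/qemu. It does a plain text search, so it may report a guest too often (for example, when one path is a prefix of another), which is the safer direction. The function name guests_using is hypothetical.

```shell
#!/bin/sh
# guests_using: print the names of all guest definitions in directory $2
# (default: /etc/libvirt/qemu) that mention the volume path $1.
guests_using() {
    vol_path=$1
    xml_dir=${2:-/etc/libvirt/qemu}
    for file in "$xml_dir"/*.xml; do
        [ -e "$file" ] || continue          # directory has no XML files
        if grep -qF -- "$vol_path" "$file"; then
            basename "$file" .xml
        fi
    done
}

# Usage on the VM Host Server (NAME and POOL as in the command above):
#   path=$(virsh vol-path NAME --pool POOL)
#   if [ -z "$(guests_using "$path")" ]; then
#       virsh vol-delete NAME --pool POOL
#   fi
```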

7.3. Locking Disk Files and Block Devices with virtlockd

Locking block devices and disk files prevents concurrent writes to these resources from different VM Guests. It provides protection against starting the same VM Guest twice, or adding the same disk to two different virtual machines. This will reduce the risk of a virtual machine's disk image becoming corrupted as a result of a wrong configuration.

The locking is controlled by a daemon called virtlockd. Since it operates independently from the libvirtd daemon, locks will endure a crash or a restart of libvirtd. Locks will even persist when virtlockd itself is updated, since it has the ability to re-execute itself. This ensures that VM Guests do not have to be restarted upon a virtlockd update.

7.3.1. Enable Locking

Locking virtual disks is not enabled by default on openSUSE. To enable it and start it automatically on reboot, perform the following steps:

Edit /etc/libvirt/qemu.conf and set

lock_manager = "lockd"

Start the virtlockd daemon with the following command:

rcvirtlockd start

Restart the libvirtd daemon with:

rclibvirtd restart

Make sure virtlockd is automatically started when booting the system:

insserv virtlockd
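The steps above can be sketched as a small script. This is an illustration only: the function name set_lock_manager is made up, and the script assumes that qemu.conf contains at most a commented-out or differently valued lock_manager line, which it rewrites in place; if no such line exists, the setting is appended.

```shell
#!/bin/sh
# set_lock_manager: make sure the given configuration file contains
#   lock_manager = "lockd"
# An existing (possibly commented-out) lock_manager line is rewritten;
# otherwise the setting is appended to the file.
set_lock_manager() {
    conf=$1
    pattern='^[#[:space:]]*lock_manager[[:space:]]*=.*'
    if grep -q "$pattern" "$conf"; then
        tmp=$(mktemp)
        sed "s/$pattern/lock_manager = \"lockd\"/" "$conf" > "$tmp"
        cat "$tmp" > "$conf"    # keeps owner and permissions of $conf
        rm -f "$tmp"
    else
        printf 'lock_manager = "lockd"\n' >> "$conf"
    fi
}

# Usage as root on the VM Host Server, followed by the commands from
# the steps above:
#   set_lock_manager /etc/libvirt/qemu.conf
#   rcvirtlockd start
#   rclibvirtd restart
#   insserv virtlockd
```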

7.3.2. Configure Locking

By default virtlockd is configured to automatically lock all disks configured for your VM Guests. The default setting uses a "direct" lockspace, where the locks are acquired against the actual file paths associated with the VM Guest <disk> devices. For example, flock(2) will be called directly on /var/lib/libvirt/images/my-server/disk0.raw when the VM Guest contains the following <disk> device:

    <disk type='file' device='disk'>
      <driver name='qemu' type='raw'/>
      <source file='/var/lib/libvirt/images/my-server/disk0.raw'/>
      <target dev='vda' bus='virtio'/>
    </disk>

The virtlockd configuration can be changed by editing the file /etc/libvirt/qemu-lockd.conf. It also contains detailed comments with further information. Make sure to activate configuration changes by reloading virtlockd:

rcvirtlockd reload

![[Note]](static/images/note.png) | Locking Currently Only Available for All Disks |

|---|---|

As of SUSE Linux Enterprise 11 SP3 locking can only be activated globally, so that all virtual disks are locked. Support for locking selected disks is planned for future releases. | |

7.3.2.1. Enabling an Indirect Lockspace

virtlockd's default configuration uses a "direct" lockspace, where the locks are acquired against the actual file paths associated with the <disk> devices. If the disk file paths are not accessible to all hosts, virtlockd can be configured to allow an "indirect" lockspace, where a hash of the disk file path is used to create a file in the indirect lockspace directory. The locks are then held on these hash files instead of the actual disk file paths. Indirect lockspace is also useful if the file system containing the disk files does not support fcntl() locks. An indirect lockspace is specified with the file_lockspace_dir setting:

file_lockspace_dir = "/MY_LOCKSPACE_DIRECTORY"

7.3.2.2. Enable Locking on LVM or iSCSI Volumes

To lock virtual disks placed on LVM or iSCSI volumes shared by several hosts, locking needs to be done by UUID rather than by path (which is used by default). Furthermore, the lockspace directory needs to be placed on a shared file system accessible by all hosts sharing the volume. Set the following options for LVM and/or iSCSI:

    lvm_lockspace_dir = "/MY_LOCKSPACE_DIRECTORY"
    iscsi_lockspace_dir = "/MY_LOCKSPACE_DIRECTORY"